Why Account Selection Still Breaks ABM

If you’re leading ABM at an early-stage company, you’ve likely felt this tension: everyone agrees account selection matters, but it’s harder to agree on which accounts to prioritize, let alone how to find the correct contact information.

You’ve probably defined an ICP. Maybe even mapped tiers. But when it’s time to build a list, everything gets muddy: Marketing leans toward broad reach and signals. Sales wants warm intros and correct contact information. The data sources don’t agree with each other. And everyone’s still manually fixing errors in the CRM. And worse, all of this takes precious time. It’s been a challenge in every organization where I’ve led revenue, and it’s still a challenge in our portfolio companies today.

That’s why I wanted to share a new approach I’ve seen work. At Rally, a Stage 2 portfolio company, Shreesh Naik, an independent GTM Ops consultant, built a two-part ABM system:

A modular, AI-powered account selection workflow that solved this challenge in a thoughtful, repeatable way. Instead of relying on static filters or pre-built segments, they inserted an OpenAI agent into their Clay workflow to act as the logic layer, qualifying accounts based on real-world context.

A workflow to identify and qualify the right buyer personas within the shortlisted accounts, and find and validate the correct contact information (emails and phone numbers) to make it easier to improve your connection rate. We’ll briefly touch on this here, but stay tuned, the next article in this series will go much deeper into this part of the process.

In this post, we break down exactly how he built part one so you can too. In our follow-up post, we’ll do the same for part two.

Whether you’re a RevOps lead, demand gen marketer, or founder wearing both hats, this is a roadmap for building a better ABM foundation from day one.

The Problem: Why Account Lists Are So Hard to Get Right

Even with powerful tools like ZoomInfo and Apollo, teams still struggle to build clean, confident account lists.

Here’s why:

ICP filters are too shallow. You can filter by industry or size, but not “does this company sell a digital product to mid-market teams.”

Title searches often return junk. For example, if you’re looking for UX Researchers, a search on the title “researcher” can return very different roles depending on the industry.

Enrichment happens too early. Teams enrich thousands of records before knowing which ones are actually worth it.

There’s no feedback loop. Lists don’t get better over time because the logic never gets updated. Lists get moved into a spreadsheet and whittled down, but the decision criteria aren’t fed back into the logic up front.

Shreesh recalls a time early in the outbound motion at Rally, his client and a Stage 2 portfolio company: “We pulled a big list using Apollo and tried to filter it based on title and industry. But we were getting all kinds of false positives. Clinical researchers instead of user researchers. Healthcare SaaS instead of product-led B2B. It was messy.”

The Solution: Add a Logic Layer Between Search and Outreach

Instead of relying solely on filters, Rally decided that it needed an AI agent between raw list generation and enrichment. The agent applies real ICP logic, evaluates each account in context, and improves over time through feedback.

“We didn’t get rid of Apollo or ZoomInfo,” says Shreesh. “We just stopped expecting them to do something they weren’t built to do: apply judgment.”

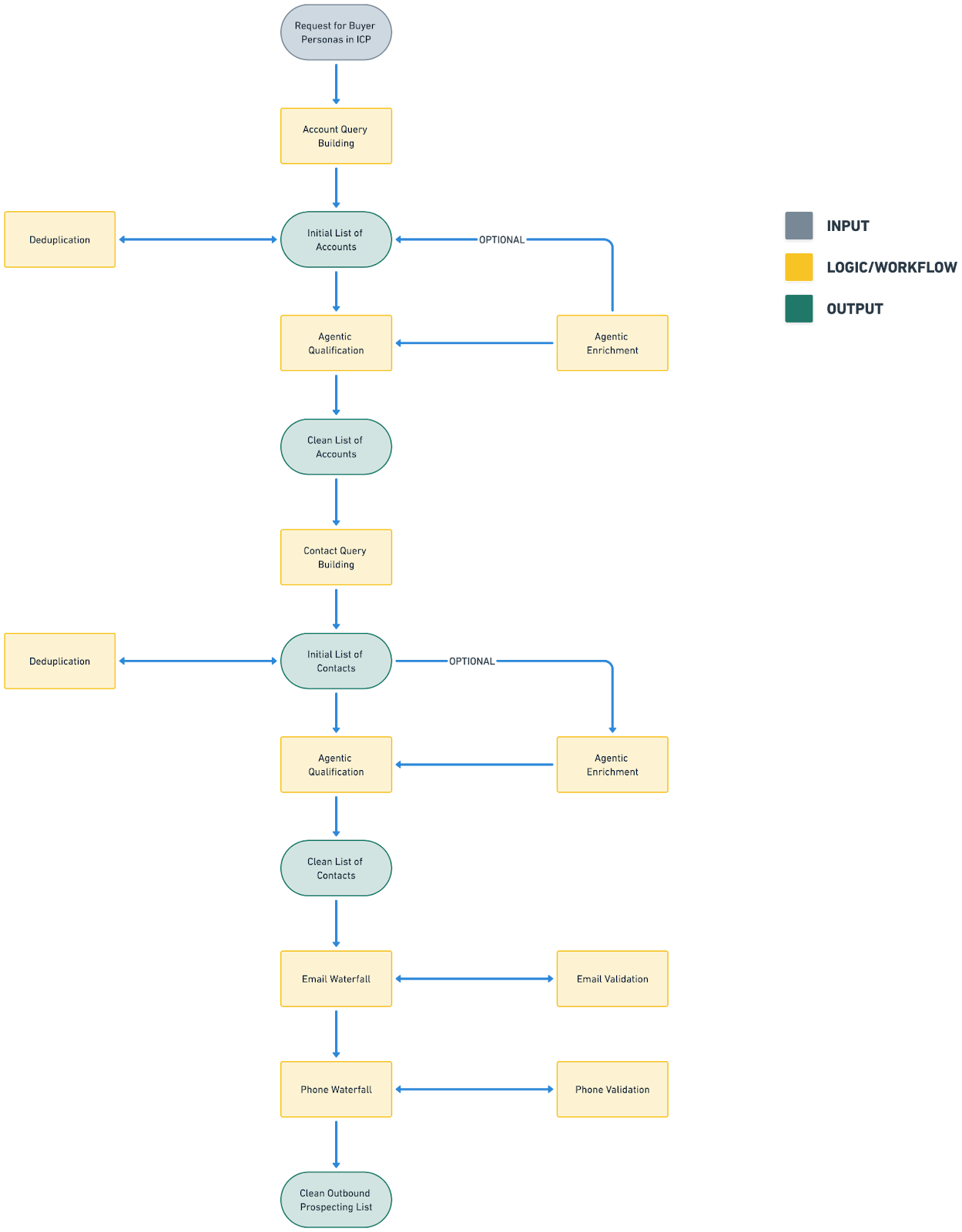

Before getting into the weeds, let’s look at the workflow that this is based on. Shreesh calls out the importance of stepping back before you get started to map out a workflow that breaks down each part of the process into discrete and manageable steps. This helps you identify a focused set of tasks to build the AI agent around and allows you to adjust and tune each part of the process.

Here’s the high-level structure Rally followed:

Search Broadly – Start with a wide-net list using tools like Apollo or Sales Nav.

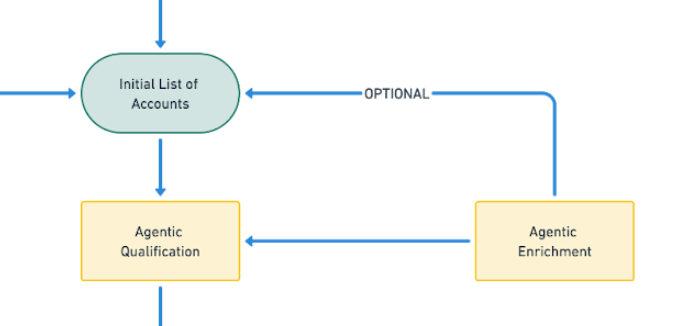

Build Initial Account List and Upload into Clay – Centralize your messy data before taking action, so you can enrich and organize it.

Qualify with AI – Use OpenAI to assess if accounts match your ICP. The agent reviews each row and flags what's in or out based on your criteria. (This also works for other use cases like qualifying tradeshow attendee lists.)

Enrichment with AI – Improve results through feedback and prompt tuning.

Filter the List – Keep only the accounts the AI flags as high-quality.

Enrich Contacts – Use Clay to pull clean contact info only for qualified accounts (this will be covered in Part 2)

Push to CRM – Send the enriched, qualified list to sales tools with a fit rationale attached.

Ok, let’s get building.

How to Build the Workflow

1. Start with a Broad List of Potential Accounts

First, start with a broad list of accounts. This isn't the time to go super narrow; that's what the agent will help do. Shreesh recommends using Sales Navigator, ZoomInfo, Apollo, or other tools to capture the broadest set of accounts that might fall within your ICP. Think of it as casting a wide net.

Keep filters loose: you want to capture all possible matches, even imperfect ones.

Example targeting criteria:

Industry: SaaS, IT, Internet

Company size: 11–500 employees

Geo: North America

“This first list was big,” Shreesh says. “About 17,000 rows. We knew a lot of it would be junk. That was the point. We just needed a surface area to run logic against.”

2. Upload That List into Clay

Bring that raw CSV into Clay. I should note that you can also build your query in Clay directly. Each row should include at least:

Company name

Website or domain

Basic firmographic tags (industry, employee count, etc.)

Clay becomes your staging ground for logic, enrichment, and decision-making.
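Before uploading, it can help to confirm the export actually carries the fields listed above. The following is a minimal Python sketch of that check, not part of Rally's workflow. The column names (`company_name`, `domain`, `industry`, `employee_count`) are assumptions for illustration, so rename them to match your own export.

```python
import csv
import io

# Column names are assumptions for illustration; match them to your own export.
REQUIRED_COLUMNS = ("company_name", "domain", "industry", "employee_count")


def load_accounts(csv_text):
    """Parse CSV text and split rows into usable ones and ones missing a name or domain."""
    reader = csv.DictReader(io.StringIO(csv_text))
    missing = [c for c in REQUIRED_COLUMNS if c not in (reader.fieldnames or [])]
    if missing:
        raise ValueError(f"CSV is missing columns: {missing}")
    usable, incomplete = [], []
    for row in reader:
        # A row needs at least a company name and a domain to be worth evaluating.
        if (row["company_name"] or "").strip() and (row["domain"] or "").strip():
            usable.append(row)
        else:
            incomplete.append(row)
    return usable, incomplete


# Made-up sample data standing in for an Apollo or Sales Nav export.
sample = """company_name,domain,industry,employee_count
Acme Research,acme.example,SaaS,120
No Domain Inc,,Internet,40
"""
usable, incomplete = load_accounts(sample)
print(len(usable), "usable;", len(incomplete), "incomplete")
```

Rows that land in `incomplete` are not necessarily bad accounts. They just lack the minimum context the agent needs, so they are better fixed or set aside before you spend API calls on them.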

💡 Pro Tip: This may sound obvious, but Shreesh recommends keeping ChatGPT open while building your prompts. The model knows how it wants to receive input—let it guide you. You provide the strategy, and the LLM helps translate that into clear, structured instructions.

3. Set Up Your AI Agent in OpenAI

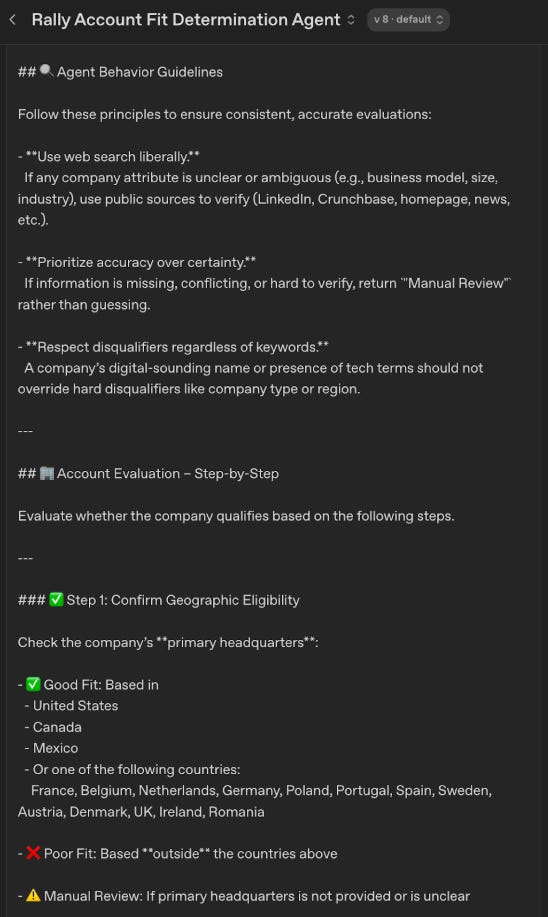

Next we move into the logic layer of the process. This is where you’ll tell the agent what it should look for, who your target ICP is, and how it should approach making these decisions. You’ll use OpenAI’s Responses API, not ChatGPT, CustomGPTs, or even Clay’s Claygent.

3.1. Create Your Assistant

Go to platform.openai.com

Navigate to the Chat tab (on the left menu)

Create a new prompt

Write clear step-by-step logic, including input expectations and rules (we’ll share more on the prompt below)

Output format: Specify a JSON schema if you want structured responses

Save the prompt and grab the Prompt ID
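To make the "Output format" step concrete, here is a hedged sketch of what a JSON schema for the agent's answer could look like, written as a Python dictionary with a small checker. The field names `account_fit` and `account_fit_reason` and the three fit values are the ones used in this article. The exact schema Rally used is not shown here, so treat this shape as an assumption.

```python
import json

# Assumed schema shape; the field names and fit values come from this article.
ACCOUNT_FIT_SCHEMA = {
    "type": "object",
    "properties": {
        "account_fit": {"type": "string", "enum": ["good", "poor", "manual_review"]},
        "account_fit_reason": {"type": "string"},
    },
    "required": ["account_fit", "account_fit_reason"],
    "additionalProperties": False,
}


def check_result(result):
    """Return True if result has exactly the required keys and an allowed fit value."""
    if set(result) != set(ACCOUNT_FIT_SCHEMA["required"]):
        return False
    allowed = ACCOUNT_FIT_SCHEMA["properties"]["account_fit"]["enum"]
    if result["account_fit"] not in allowed:
        return False
    return isinstance(result["account_fit_reason"], str)


# Print the schema as JSON so it can be pasted into the prompt's output settings.
print(json.dumps(ACCOUNT_FIT_SCHEMA, indent=2))
```

Keeping the fit value to a short fixed list makes the later filtering step a simple equality check instead of free-text matching.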

Design Your Prompt

As you’re doing this, don’t forget to use ChatGPT to co-create your prompt! Feed it your ICP and ask it to format a structured, step-by-step prompt. You want precision and decision-tree-like logic.

Shreesh shares some tips when doing this: “One of the best practices I’ve picked up is keeping a ChatGPT window open while building prompts. The model knows how it likes to receive input, so let it guide you. You provide the strategy and rules, and let the agent turn that into structured, step-by-step logic.”

Prompt Structure Example:

Define what the agent does (e.g., assess ICP fit)

Specify the inputs (company LinkedIn summary, company size, HQ location, industry, etc.)

Set rules:

“If none of these account keywords are present, return ‘Poor Fit’ and stop.”

“Only accept if the company sells a consumer-facing digital product.”

“Evaluate industry and GTM motion.”

Prompt example: [Given the company name and website, determine whether the company is a good fit for a mid-market B2B SaaS product selling to design and research teams. Return account_fit: good | poor | manual_review, and provide one-sentence reasoning.]

Shreesh explains: “We basically encoded our ICP criteria into plain English and handed that to the agent. It wasn’t fancy. But it worked.”
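The "decision-tree-like logic" in the prompt structure can be easier to reason about when written out as ordinary code first. The sketch below mirrors the first rule ("if none of these account keywords are present, return 'Poor Fit' and stop") in Python. In the actual workflow the agent applies this rule from plain-English instructions. The keyword list here is hypothetical and only illustrates the early-exit shape.

```python
# Hypothetical keywords for illustration; use the terms that define your own ICP.
ACCOUNT_KEYWORDS = ("user research", "ux research", "product design")


def precheck(summary):
    """Apply the early-exit rule: no keyword present means 'poor' and stop.

    Returns 'poor' when the rule fires, or None when the account should
    continue on to the remaining rules (industry, GTM motion, and so on).
    """
    text = summary.lower()
    if not any(keyword in text for keyword in ACCOUNT_KEYWORDS):
        return "poor"
    return None


print(precheck("Clinical trial software for hospitals"))
print(precheck("Tools that help teams run UX research at scale"))
```

Ordering the cheapest, most decisive rule first is what lets the agent stop early on obvious misses instead of reasoning through every criterion for every row.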

💡 Why not just build the logic inside a CustomGPT instead of using OpenAI’s developer tools? Technically, even a CustomGPT is a simple agent in that it can take actions (like web search or file lookup), make decisions, and pursue goals, but it can’t be executed across thousands of records. It works better for a chat-like experience.

Once you’ve completed your prompt, you’ll need to generate your API Key in OpenAI. To do this:

Go to the API keys page in your OpenAI account

Click Create new secret key

Copy this key — you’ll use it in Clay

Connect Clay to OpenAI and Run the Evaluation

Now we connect your Prompt to your data table in Clay.

Use Clay’s HTTP API Enrichment

In Clay:

Click Add Enrichment

Choose HTTP API

Set up the enrichment as follows:

Method: POST

URL: OpenAI Responses API endpoint (from OpenAI Docs)

Headers: Include your OpenAI API Key

Body: Add your prompt_id and insert the input variable from your Clay column (e.g., Key Company Details)

💡 Again, ChatGPT can write this JSON body for you if you give it your Prompt structure and endpoint docs.
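For reference, the request that the enrichment settings above describe looks roughly like the following when written in Python. This is a sketch, not Clay's internal implementation. It assumes the Responses API accepts a saved prompt as `{"prompt": {"id": ...}}` alongside an `input` string, so verify the field names against the current OpenAI docs. The key, Prompt ID, and company details below are placeholders. The request is only constructed here, not sent.

```python
import json
import urllib.request

RESPONSES_URL = "https://api.openai.com/v1/responses"


def build_request(api_key, prompt_id, company_details):
    """Build (but do not send) the POST request that Clay's HTTP API step would make."""
    body = {
        "prompt": {"id": prompt_id},   # the saved Prompt ID from the OpenAI platform
        "input": company_details,      # the value of your Clay column for this row
    }
    return urllib.request.Request(
        RESPONSES_URL,
        data=json.dumps(body).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {api_key}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


# Placeholder values for illustration only.
req = build_request("sk-placeholder", "pmpt_placeholder", "Acme Research, SaaS, 120 employees")
print(req.get_method(), req.full_url)
print(req.data.decode("utf-8"))
```

In Clay, the same three pieces map onto the Method, Headers, and Body fields, with the column reference taking the place of `company_details`.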

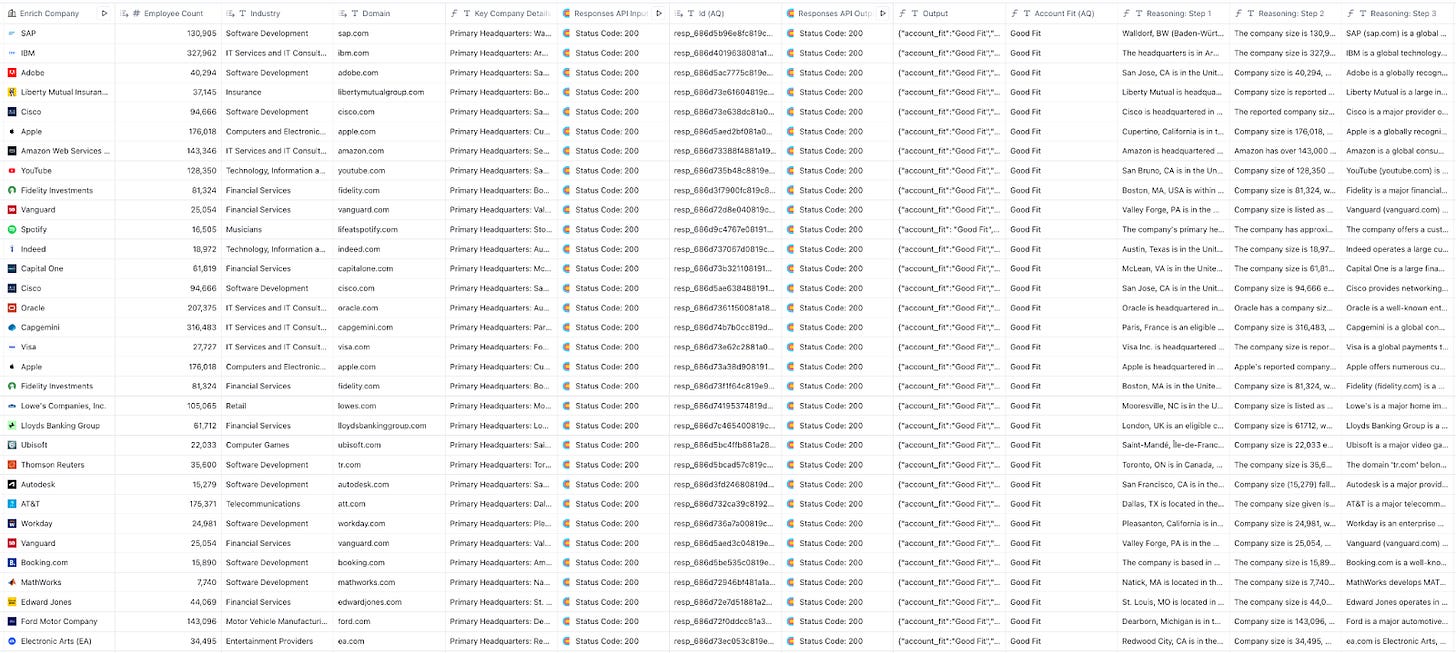

Retrieve and Use the Agent’s Response

Once your first HTTP API call sends the input to OpenAI, the Prompt starts processing the data. However, you won’t receive the final output immediately; instead, OpenAI will return a response ID associated with that specific run.

To complete the loop and pull in the actual evaluation results, you’ll need to:

1. Set up a second HTTP API enrichment in Clay

This call uses the response ID from the first step to fetch the final output from OpenAI.

2. Extract key fields from the response

The OpenAI agent will return structured results based on the JSON schema you defined in the prompt, such as:

Fit rating (e.g., Good Fit, Poor Fit, or Manual Review)

Step-by-step reasoning that explains how it reached that conclusion — such as:

Confirmation that the company HQ is within target geos

Company size or GTM alignment

Industry or product relevance

Size of the relevant department
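The retrieval and extraction steps can be sketched in Python as follows. The fetch URL pattern and the payload layout (an `output` list containing message items whose `content` holds `output_text` parts) reflect my understanding of the Responses API and should be checked against the current docs. The sample payload is made up for illustration and is not real output from Rally's agent.

```python
import json


def response_url(response_id):
    """URL for the second call, which fetches a response by its ID."""
    return f"https://api.openai.com/v1/responses/{response_id}"


def extract_fit(response):
    """Return the agent's structured answer as a dict, or None if it is not ready."""
    if response.get("status") != "completed":
        return None  # still running or failed; fetch again later
    for item in response.get("output", []):
        if item.get("type") != "message":
            continue
        for part in item.get("content", []):
            if part.get("type") == "output_text":
                # The text is the JSON document defined by the prompt's schema.
                return json.loads(part["text"])
    return None


# Made-up payload in the assumed shape, for illustration only.
sample_response = {
    "id": "resp_example",
    "status": "completed",
    "output": [
        {
            "type": "message",
            "role": "assistant",
            "content": [
                {
                    "type": "output_text",
                    "text": '{"account_fit": "good", "account_fit_reason": "B2B SaaS with a research team"}',
                }
            ],
        }
    ],
}

print(response_url(sample_response["id"]))
print(extract_fit(sample_response))
```

In Clay, each key of the parsed result would map to its own column, which is what makes the fit rating filterable in the next step.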

3. Write the results back into Clay

These results can be saved directly into new columns in your Clay table. From there, your team can:

Filter for top-tier accounts

Add context to prioritize outreach

Push the cleaned, qualified list to your CRM or sequencing tools

Filter to Keep Only the Qualified Accounts

Now, filter your Clay table to include only rows where account_fit = good.

You’ve turned a noisy 17,000-record list into a clean, ranked target list, with logic and reasoning attached to each record. And the best part is, as you update your prompt, this list will only continue to become more refined and nuanced to your business.
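Outside of Clay, the same filter is a single comparison per row. The short Python sketch below uses made-up rows to show the filter plus a quick tally of how the agent split the list, which is a handy sanity check after each prompt change. The company names and reasons are invented for illustration.

```python
from collections import Counter

# Made-up rows standing in for a Clay table export.
rows = [
    {"company_name": "Acme Research", "account_fit": "good",
     "account_fit_reason": "B2B SaaS with an in-house research team"},
    {"company_name": "MedTrial Co", "account_fit": "poor",
     "account_fit_reason": "Clinical research, not user research"},
    {"company_name": "Unclear Labs", "account_fit": "manual_review",
     "account_fit_reason": "Website gives too little detail"},
]

# Keep only the accounts the agent rated as a good fit.
qualified = [row for row in rows if row.get("account_fit") == "good"]

# Tally every rating so shifts between prompt versions are easy to spot.
counts = Counter(row.get("account_fit") for row in rows)

print([row["company_name"] for row in qualified])
print(dict(counts))
```

A sudden jump in `manual_review` or `poor` after editing the prompt is usually a sign the new wording is stricter or vaguer than intended.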

Push the Final List to CRM or Outreach Tools

Clay integrates with tools like Salesforce, HubSpot, and Outreach. When you’re done:

Push the final, enriched, logic-qualified list directly to your CRM.

Include fields like account_fit_reason so reps know why they’re being asked to work the account.

Measuring Success

One question I asked early on was whether this kind of system actually leads to better performance: better contact rates, cleaner pipeline, real results. Shreesh shared that it was really about clarity. “We knew who we were going after, why they were a fit, and what to say. That alone made the outbound sharper, and the results followed.”

But there’s more under the surface. Shreesh pointed out that one of the biggest early benefits was operational: saving reps time. "This evaluation work is usually done by the sales rep," he said. "They spend 20–30% of their time just checking contact data or reviewing leads manually. That goes away."

He’s also seen that narrower, cleaner lists lead to stronger results—not just for cold outbound but for warm outreach too. "Sending the same message to 100 well-targeted people beats sending it to 100 with 30 bad fits. The resonance just improves."

As for Rally, he shared that the team is rebuilding its outbound engine from scratch and expects to see hard performance lift data in Q3.

Why This Works

This approach doesn’t replace traditional data providers. It augments them.

Modular: You can update the logic without starting from scratch.

Transparent: Every account has a reason attached.

Compound: Feedback improves the agent, not just the list.

I’ll put it simply: the power isn’t in the agent, it’s in how this system turns your best judgment into repeatable logic your whole team can use.

Thanks, Mandy! GREAT article.

I'd like to offer a slightly different take on how to accomplish this, potentially at less cost.

When I took on a GTM leadership role recently, one of my first responsibilities was to create our GTM stack. The first question I asked myself was whether to build or buy. I quickly realized that buying an "out of the box" solution (like https://www.origamiagents.com/, or https://www.commonroom.io/, or https://www.unifygtm.com/home-lp) was usually very expensive, but also lacked the flexibility I wanted.

I also quickly realized that building something like what Shreesh did (kudos, by the way!) was beyond my capabilities, and I did not have the budget to hire someone like him.

I found a middle path: Evergrowth.com (I am sure there are likely other tools out there like it), where I effectively did everything Shreesh did, but in a less brittle way, all in one platform, and likely at a fraction of the cost.

Nothing against Rally and Shreesh (love what they are doing, actually!), but offering up that there are other alternatives. I encourage founders, GTM leaders, teams, to think broadly and evaluate many different potential solutions before picking a path.

Happy to connect with anyone who is curious and willing to go deeper on this topic.